Every SOC depends on clear, actionable security event logs, but the drive for richer visibility often collides with the reality of ballooning security log volume.

Each new detection model or compliance requirement demands more context inside those security logs – more attributes, more correlations, more metadata stitched across systems. It feels necessary: better-structured security event logs should make analysts faster and more confident.

So teams continue enriching. More lookups, more tags, more joins. And for a while, enriched security logs do make dashboards cleaner and investigations more dynamic.

Until they don’t. Suddenly ingestion spikes, storage costs surge, queries slow, and pipelines become brittle. The very effort to improve security event logs becomes the source of operational drag.

This is the paradox of modern security telemetry: the more intelligence you embed in your security logs, the more complex – and costly – they become to manage.

When “More” Stops Meaning “Better”

Security operations once had a simple relationship with data — collect, store, search.

But as threats evolved, so did telemetry. Enrichment pipelines began adding metadata from CMDBs, identity stores, EDR platforms, and asset inventories. The result was richer security logs but also heavier pipelines that cost more to move, store, and query.

The problem isn’t the intention to enrich; it’s the assumption that context must always travel with the data.

Every enrichment field added at ingest is replicated across every event, which inflates both storage and query costs. Scale that across thousands of devices and constant schema evolution, and enrichment stops being a force multiplier; it becomes a generator of noise.
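To make the replication effect concrete, here is a rough back-of-the-envelope sketch. Every number in it (event rate, field size, field count, retention) is a hypothetical assumption chosen for illustration, not a measurement from any real environment, and the helper name is made up for this example.

```python
def inline_enrichment_overhead_bytes(
    events_per_day: int,
    bytes_per_field: int,
    fields_added: int,
    retention_days: int,
) -> int:
    """Extra bytes stored when every event carries its own copy of each field."""
    return events_per_day * bytes_per_field * fields_added * retention_days


# Hypothetical inputs: 1 billion events/day, 5 added fields of ~40 bytes each,
# kept for 90 days. Compression and indexing overhead are ignored.
overhead = inline_enrichment_overhead_bytes(
    events_per_day=1_000_000_000,
    bytes_per_field=40,
    fields_added=5,
    retention_days=90,
)
print(f"{overhead / 1e12:.1f} TB of duplicated context")
```

Under these assumed inputs, five small fields add roughly 18 TB of retained data before a single analyst query runs.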

Teams often respond by trimming retention windows or reducing data granularity, which helps costs but hurts detection coverage. Others push enrichment earlier, to the edge, a move that sounds efficient but still binds context to every event.

Rethinking Where Context Belongs

Most organizations enrich at the ingest layer, adding hostnames, geolocation, or identity tags to logs as they enter a SIEM or data platform. It feels efficient, but at scale it’s where volume begins to spiral. Every added field replicates millions of times, and what was meant to make data smarter ends up making it heavier.

The issue isn’t enrichment; it’s how rigidly most teams apply it.

Instead of binding context to every raw event at source, modern teams are moving toward adaptive enrichment, a model where context is linked and referenced, not constantly duplicated.

This is where agentic automation changes the enrichment pattern. AI-driven data agents, like Cruz, can learn what context actually adds analytical value, enrich only when necessary, and retain semantic links instead of static fields.

The result is the same visibility, far less noise, and pipelines that stay efficient even as data models and detection logic evolve.

In short, the goal isn’t to enrich everything faster. It’s to enrich smarter — letting context live where it’s most impactful, not where it’s easiest to apply.

The Architecture Shift: From Static Fields to Dynamic Context

In legacy pipelines, enrichment is a static process. Rules are predefined, transformations are rigid, and every event that matches a condition gets the same expanded schema.

But context isn’t static.

Asset ownership changes. Threat models evolve. A user’s role might shift between departments, altering the meaning of their access logs overnight.

A static enrichment model can’t keep up: it either lags behind or floods the system with stale attributes.

A dynamic enrichment architecture treats context as a living layer rather than a stored attribute. Instead of embedding every data point into every security log, it builds relationships — lightweight references between data entities that can be resolved on demand.

Think of it as context caching: pipelines tag logs with lightweight identifiers and resolve details only when needed. This approach doesn’t just cut cost; it preserves contextual integrity. Analysts can trust that what they see reflects the latest known state, not an outdated enrichment rule from last quarter.
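The idea of tagging with an identifier and resolving on demand can be sketched in a few lines. This is a minimal illustration, not a description of any product's internals: the in-memory `context_store`, the `asset_ref` field, and the `tag_event` and `resolve_context` helpers are all hypothetical names invented for this example.

```python
from dataclasses import asdict, dataclass


@dataclass
class AssetContext:
    owner: str
    department: str


# Hypothetical context store; in practice this might be a CMDB or identity store.
context_store = {"asset-17": AssetContext(owner="alice", department="finance")}


def tag_event(raw_event: dict, asset_id: str) -> dict:
    """At ingest, attach only a lightweight reference instead of full context."""
    return {**raw_event, "asset_ref": asset_id}


def resolve_context(event: dict, store: dict) -> dict:
    """At query time, look up the current context for the referenced asset."""
    ctx = store.get(event["asset_ref"])
    return {**event, "context": asdict(ctx) if ctx else None}


event = tag_event({"action": "login", "src_ip": "10.0.0.5"}, "asset-17")
before = resolve_context(event, context_store)

# Ownership changes after the event was stored; the stored event is untouched.
context_store["asset-17"] = AssetContext(owner="bob", department="engineering")
after = resolve_context(event, context_store)

print(before["context"]["owner"])  # alice
print(after["context"]["owner"])   # bob
```

The stored event never grows, and the resolved view follows the store. One design choice to weigh: resolving at query time shows the current state, so investigations that need the state as of the event would likely require a versioned context store.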

The Hidden Impact on Security Analytics

When context is over-applied, it doesn’t just bloat data — it skews analytics.

Correlation engines begin treating repeated metadata as signals. That rising noise floor buries high-fidelity detections under patterns that look relevant but aren’t.

Detection logic slows down. Query times stretch. Mean time to respond increases.

Adaptive enrichment, in contrast, allows the analytics layer to focus on relationships instead of repetition. By referencing context dynamically, queries run faster and correlation logic becomes more precise, operating on true signal, not replicated metadata.

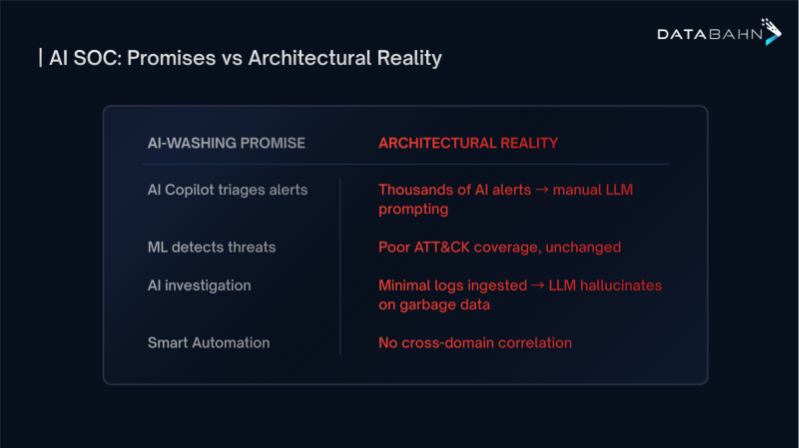

This becomes especially relevant for SOCs experimenting with AI-assisted triage or LLM-powered investigation tools. Those models thrive on semantically linked data, not redundant payloads.

If the future of SOC analytics is intelligent automation, then data enrichment has to become intelligent too.

Why This Matters Now

The urgency is no longer hypothetical.

Security data platforms are entering a new phase of stress. The move to cloud-native architectures, the rise of identity-first security, and the integration of observability data into SIEM pipelines have made enrichment logic both more critical and more fragile.

Each system now produces its own definition of context: endpoint, identity, network, and application telemetry all speak different schemas. Without a unifying approach, enrichment becomes a patchwork of transformations, each one slightly out of sync.
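As a small illustration of what a unifying approach might look like, the sketch below maps source-specific field names onto one shared vocabulary. The source labels (`edr`, `idp`), the field names, and the `normalize` helper are hypothetical and not drawn from any particular product or standard schema.

```python
# Hypothetical per-source mappings from native field names to shared names.
FIELD_MAPS = {
    "edr": {"hostname": "host", "user_name": "user"},
    "idp": {"device": "host", "actor": "user"},
}


def normalize(source: str, event: dict) -> dict:
    """Rename known source-specific fields; pass unknown fields through unchanged."""
    mapping = FIELD_MAPS.get(source, {})
    return {mapping.get(key, key): value for key, value in event.items()}


edr_event = normalize("edr", {"hostname": "wk-1", "user_name": "bob"})
idp_event = normalize("idp", {"device": "wk-1", "actor": "bob", "result": "success"})

# Both events now share the same keys for host and user.
print(edr_event["host"] == idp_event["host"])  # True
```

With one mapping per source, downstream enrichment and correlation can key on `host` and `user` without caring which system emitted the event.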

The result? Gaps in detection coverage, inconsistent normalization, and a steady growth of “dark data” — security event logs so inflated or malformed that they’re excluded from active analysis.

A smarter enrichment strategy doesn’t just cut cost; it restores semantic cohesion — the shared meaning across security data that makes analytics work at all.

Enter the Agentic Layer

Adaptive enrichment becomes achievable when pipelines themselves learn.

Instead of following static transformation rules, agents observe how data is used and evolve the enrichment logic accordingly.

For example:

- If a certain field consistently adds value in detections, the agent prioritizes its inclusion.

- If enrichment from a particular source introduces redundancy or schema drift, it learns to defer or adjust.

- When new data sources appear, the agent aligns their structure dynamically with existing models, avoiding constant manual tuning.

This transforms enrichment from a one-time process into a self-correcting system, one that continuously balances fidelity, performance, and cost.
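One very simple way to picture the first behavior in the list above is a policy that tracks how often each enrichment field is actually used by detections and inlines only the fields that earn their place. This is a toy heuristic under stated assumptions, not how any specific agent is implemented; the `EnrichmentPolicy` class, its threshold, and the field names are hypothetical.

```python
from collections import Counter


class EnrichmentPolicy:
    """Toy usage-driven policy: inline a field only if detections rely on it often."""

    def __init__(self, inline_threshold: float = 0.5):
        self.inline_threshold = inline_threshold
        self.detections_seen = 0
        self.field_hits = Counter()

    def record_detection(self, fields_used):
        """Record which enrichment fields a fired detection actually referenced."""
        self.detections_seen += 1
        self.field_hits.update(set(fields_used))

    def should_inline(self, field: str) -> bool:
        """Inline when the field's usage rate meets the threshold; otherwise defer."""
        if self.detections_seen == 0:
            return False
        usage_rate = self.field_hits[field] / self.detections_seen
        return usage_rate >= self.inline_threshold


policy = EnrichmentPolicy(inline_threshold=0.5)
policy.record_detection(["user_role", "geo"])
policy.record_detection(["user_role"])
policy.record_detection(["user_role"])
policy.record_detection(["asset_owner"])

print(policy.should_inline("user_role"))  # True: used in 3 of 4 detections
print(policy.should_inline("geo"))        # False: used in 1 of 4 detections
```

Fields that fall below the threshold would be left as references and resolved on demand rather than written into every event.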

A More Sustainable Future for Security Data

In the next few years, CISOs and data leaders will face a deeper reckoning with their telemetry strategies.

Data volume will keep climbing. AI-assisted investigations will demand cleaner, semantically aligned data. And cost pressures will force teams to rethink not just where data lives, but how meaning is managed.

The future of enrichment isn’t about adding more fields.

It’s about building systems that understand when and why context matters, and applying it with precision rather than abundance.

By shifting from rigid enrichment at ingest to adaptive, agentic enrichment across the pipeline, enterprises gain three crucial advantages:

- Efficiency: Less duplication and storage overhead without compromising visibility.

- Agility: Faster evolution of detection logic as context relationships stay dynamic.

- Integrity: Context always reflects the present state of systems, not outdated metadata.

This is not a call to collect less — it’s a call to collect more wisely.

The Path Forward

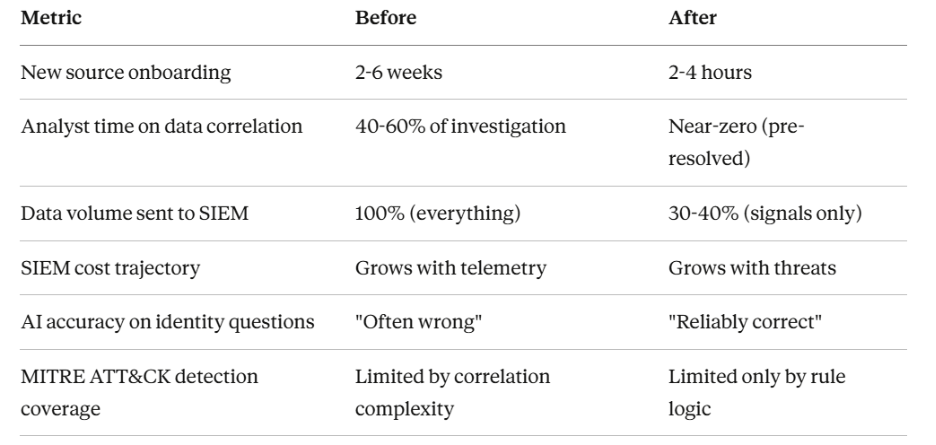

At Databahn, this philosophy is built into how the platform treats data pipelines: not as static pathways but as adaptive systems that learn. Our agentic data layer operates across the pipeline, enriching context dynamically and linking entities without multiplying volume. It allows enterprises to unify security and observability data without sacrificing control, performance, or cost predictability.

In modern security, visibility isn’t about how much data you collect — it’s about how intelligently that data learns to describe itself.
