Connecting Splunk to value (and data)

Future-proof the world's most beloved SIEM solution with DataBahn's Security Data Fabric. Optimize ingestion and compute, control workload costs, and save on data engineering effort.

Achieve awesomeness with Splunk and DataBahn

Enterprise SOCs love Splunk for its learned context, powerful analytics, and fast search, with end-to-end visibility across large data volumes.

With DataBahn, SOCs can seamlessly collect data and optimize ingestion at velocity, ease the strain on their budget and infrastructure, and reduce Splunk workload costs by ~50% while enhancing data operations.

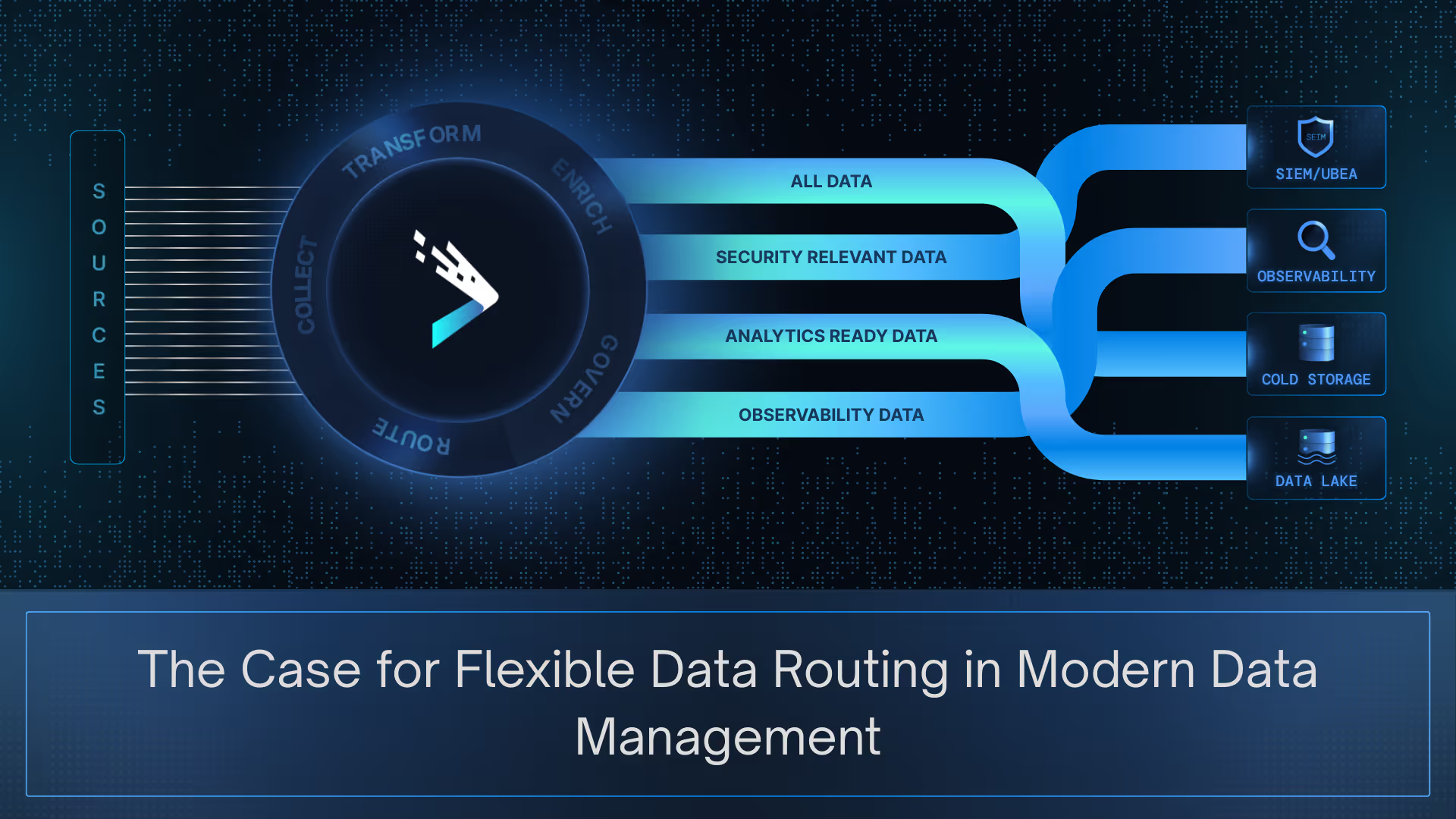

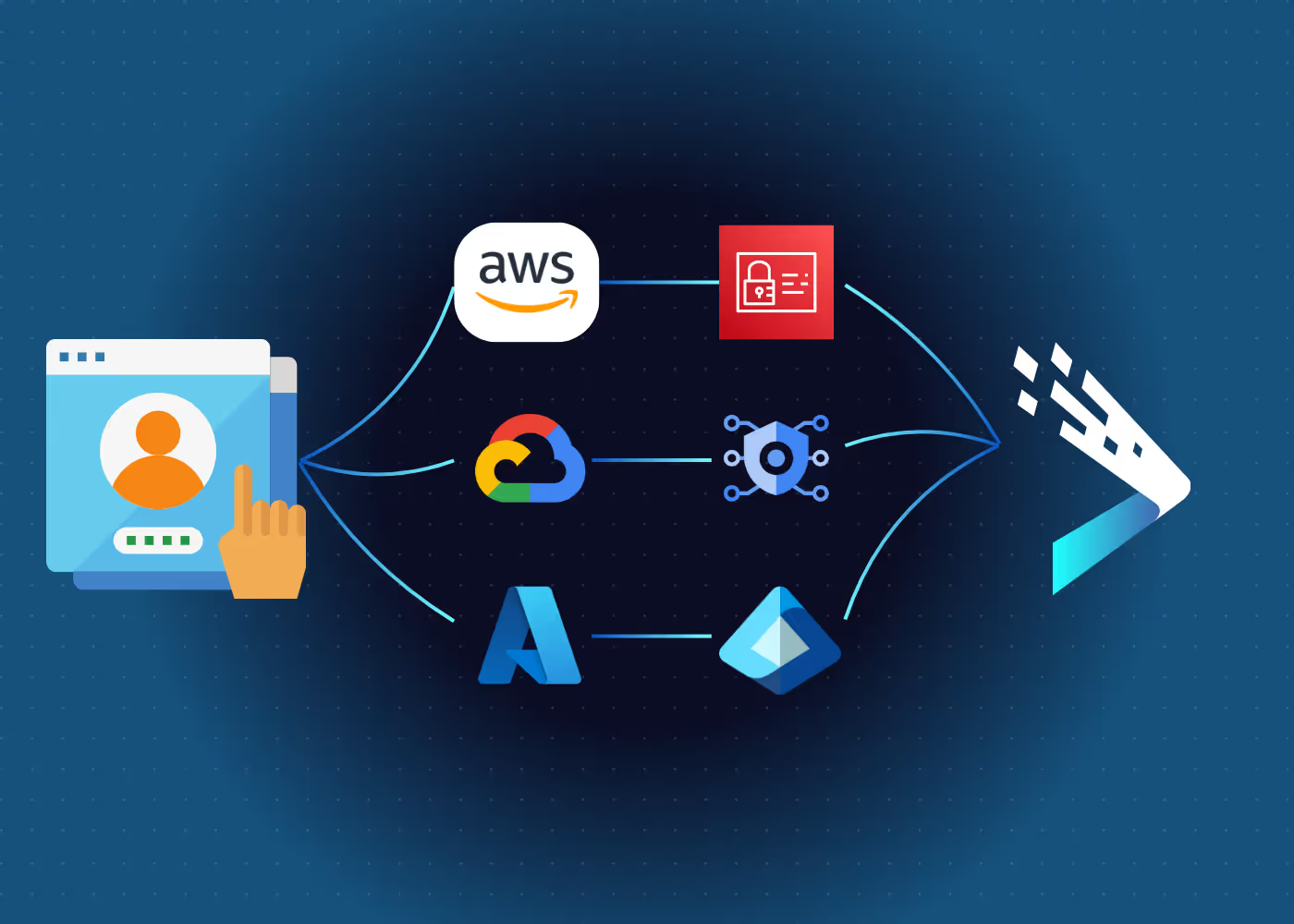

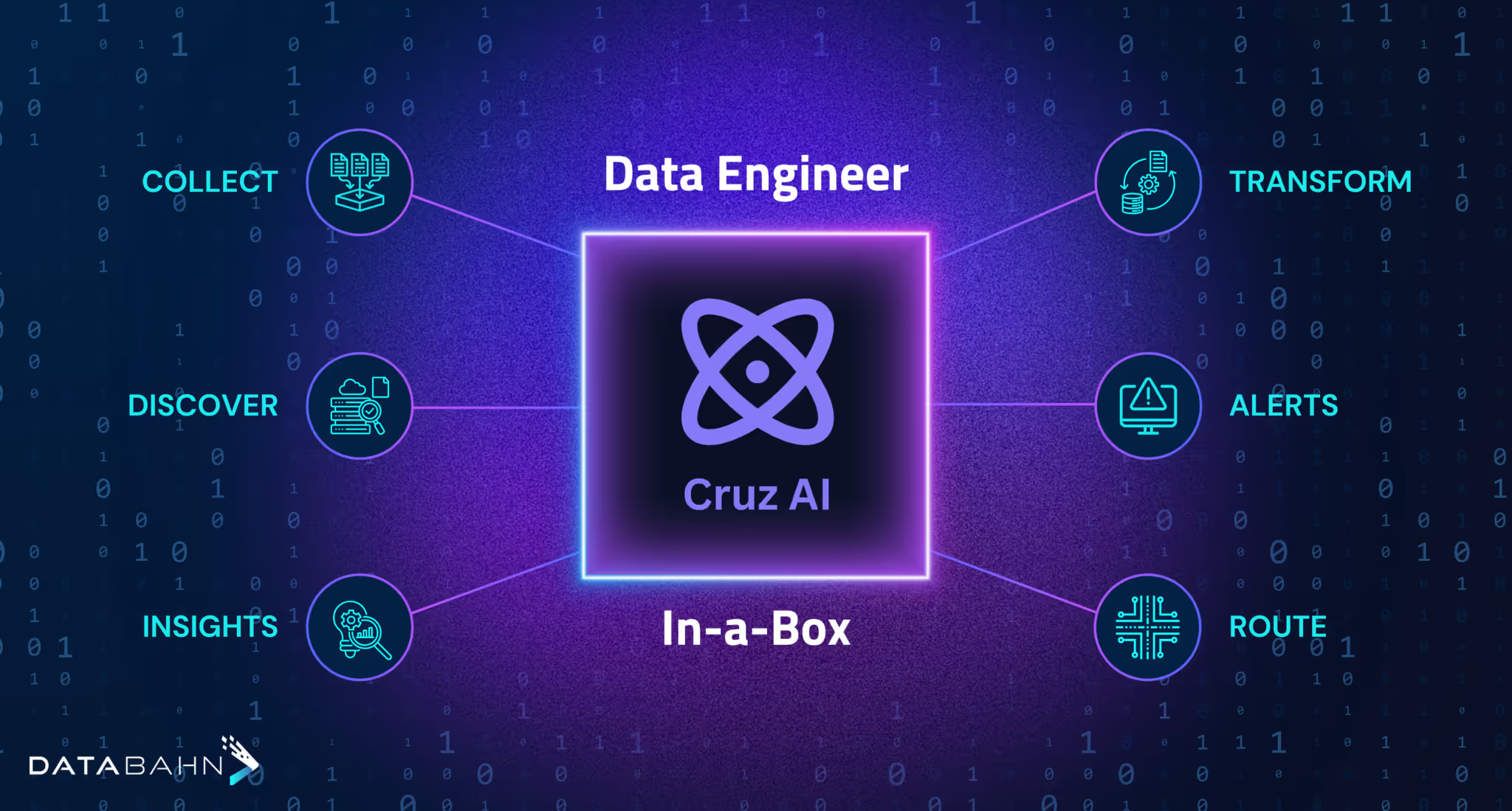

Plug-and-play connectors and AI-powered auto-parsing of custom apps and microservices

Optimize data ingestion and routing while controlling costs and workloads

Reduction in manual effort in data parsing and transformation

Supercharge your Splunk SIEM

Your starting point for all things DataBahn

Have Questions?

Here's what we hear often

Ready to accelerate towards Data Utopia?

Experience the speed, simplicity, and power of our AI-powered data fabric platform.
