Reduce Alert Fatigue in Microsoft Sentinel

AI-powered log prioritization can cut log volume by up to 50%

Microsoft Sentinel has rapidly become the go-to SIEM for enterprises needing strong security monitoring and advanced threat detection. A Forrester study found that companies using Microsoft Sentinel can achieve up to a 234% ROI. Yet many security teams fall short, drowning in alerts, rising ingestion costs, and missed threats.

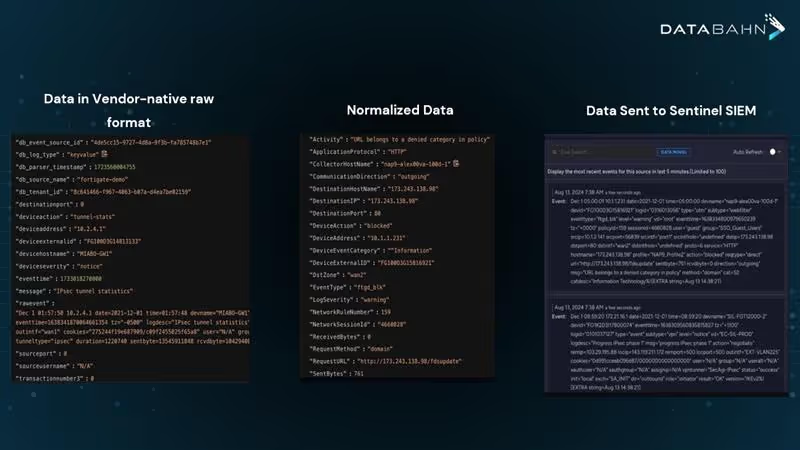

The issue isn’t Sentinel itself, but the raw, unfiltered logs flowing into it.

As organizations bring in data from non-Microsoft sources like firewalls, networks, and custom apps, security teams face a flood of noisy, irrelevant logs. This overload leads to alert fatigue, higher costs, and increased risk of missing real threats.

AI-powered log ingestion solves this by filtering out low-value data, enriching key events, and mapping logs to the right schema before they hit Sentinel.

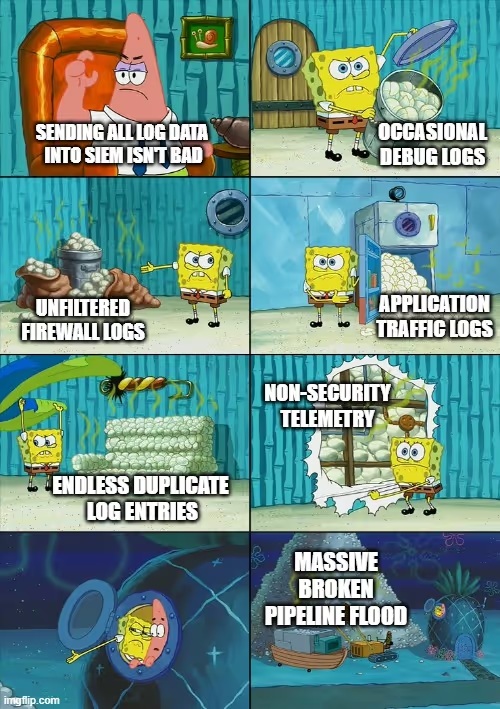
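To make the three steps concrete (filter, enrich, map to a schema), here is a minimal Python sketch of a pre-ingestion stage. It is only an illustration under stated assumptions, not DataBahn's or Sentinel's implementation: the field names, the `LOW_VALUE_EVENTS` set, the `ASSET_CRITICALITY` lookup, and the `FIELD_MAPS` table are all hypothetical.

```python
from typing import Optional

# Hypothetical event types treated as low value; a real list would come
# from your own analysis of which logs never drive detections.
LOW_VALUE_EVENTS = {"heartbeat", "debug", "keepalive"}

# Hypothetical asset lookup used to enrich events with context.
ASSET_CRITICALITY = {"10.0.0.5": "high", "10.0.0.9": "low"}

# Hypothetical per-source field mappings onto one common schema.
FIELD_MAPS = {
    "firewall": {"src": "source_ip", "dst": "dest_ip",
                 "act": "action", "type": "event_type"},
    "custom_app": {"client_addr": "source_ip", "server_addr": "dest_ip",
                   "result": "action", "kind": "event_type"},
}


def normalize(source: str, raw: dict) -> dict:
    """Rename source-specific fields to the common schema."""
    mapping = FIELD_MAPS[source]
    event = {target: raw[field] for field, target in mapping.items() if field in raw}
    event["source"] = source
    return event


def process(source: str, raw: dict) -> Optional[dict]:
    """Return a normalized, enriched event, or None if it should be dropped."""
    event = normalize(source, raw)
    if event.get("event_type") in LOW_VALUE_EVENTS:
        return None  # filtered out before it reaches the SIEM
    event["asset_criticality"] = ASSET_CRITICALITY.get(event.get("dest_ip"), "unknown")
    return event
```

Calling `process("firewall", {...})` on a denied connection returns one dictionary in the common schema with an added `asset_criticality` field, while a `heartbeat` event from any source returns `None` and is never forwarded.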

Why Security Teams Struggle with Alert Overload (The Log Ingestion Nightmare)

According to recent research by DataBahn, SOC analysts spend nearly 2 hours daily on average chasing false positives. This is one of the biggest efficiency killers in security operations.

Solutions like Microsoft Sentinel promise full visibility across your environment. But on the ground, it’s rarely that simple.

There’s more data. More dashboards. More confusion. Here are two major reasons security teams struggle to see beyond alerts on Sentinel.

- Built for everything, overwhelming for everyone

Microsoft Sentinel connects with almost everything: Azure, AWS, Defender, Okta, Palo Alto, and more.

But more integrations mean more logs. And more logs mean more alerts.

Most organizations rely on default detection rules, which tend to be overly sensitive and can trigger alerts for minor fluctuations.

Unless every rule, signal, and threshold is fine-tuned (and they rarely are), these alerts become noise, distracting security teams from actual threats.

Tuning requires deep KQL expertise and time.

For already stretched-thin teams, spending days accurately fine-tuning detection rules is unsustainable.

It gets harder when you bring in data from non-Microsoft sources like firewalls, network tools, or custom apps.

Setting up these pipelines can take 4 to 8 weeks of engineering work, something most SOC teams simply don’t have the bandwidth for.

- Noisy data in = noisy alerts out

Sentinel ingests logs from every layer, including network, endpoints, identities, and cloud workloads. But if your data isn’t clean, normalized, or mapped correctly, you’re feeding garbage into the system. What comes out are confusing alerts, duplicates, and false positives. In threat detection, your log quality is everything. If your data fabric is messy, your security outcomes will be too.
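Duplicates are one of the simplest kinds of noise to handle before ingestion. The sketch below drops repeats of the same event that arrive within a short time window. It assumes events are dictionaries with a numeric `timestamp` (epoch seconds) plus `source_ip`, `dest_ip`, and `action` fields; those names and the 60-second default are illustrative choices, not a Sentinel or DataBahn convention.

```python
def deduplicate(events, window_seconds=60):
    """Keep the first copy of each event and drop repeats of the same key
    that arrive less than window_seconds after the last kept copy."""
    last_kept = {}  # key -> timestamp of the last event kept for that key
    kept = []
    for event in sorted(events, key=lambda e: e["timestamp"]):
        key = (event["source_ip"], event["dest_ip"], event["action"])
        previous = last_kept.get(key)
        if previous is None or event["timestamp"] - previous >= window_seconds:
            kept.append(event)
            last_kept[key] = event["timestamp"]
    return kept
```

A wider window suppresses more repeats but also hides how often something recurred, so a real pipeline might attach a repeat count to the kept event instead of silently discarding the copies.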

The Cost Is More Than Alert Fatigue

False alarms don’t just wear down your security team. They can also burn through your budget. When you're ingesting terabytes of logs from various sources, data ingestion costs can escalate rapidly.

Microsoft Sentinel's pricing calculator estimates that ingesting 500 GB of data per day can cost approximately $525,888 annually, and that figure already reflects a discounted rate.

While the pay-as-you-go model is appealing, without effective data management, costs can grow unnecessarily high. Many organizations end up paying to store and process redundant or low-value logs. This adds both cost and alert noise. And the problem is only growing. Log volumes are increasing at a rate of 25%+ year over year, which means costs and complexity will only continue to rise if data isn’t managed wisely. By filtering out irrelevant and duplicate logs before ingestion, you can significantly reduce expenses and improve the efficiency of your security operations.
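A rough back-of-the-envelope projection shows how growth and filtering interact. The sketch below starts from the $525,888 figure quoted above and assumes, for simplicity, that cost scales linearly with ingested volume (real pricing tiers and commitment discounts do not behave this neatly). The 25% growth rate and the 50% reduction are taken from this article as illustrative inputs, and the function name is hypothetical.

```python
ANNUAL_COST_USD = 525_888  # quoted estimate for 500 GB/day
GROWTH_RATE = 0.25         # yearly log volume growth cited above


def projected_cost(annual_cost, growth_rate, years, reduction=0.0):
    """Estimate yearly ingestion cost after `years` of compound growth,
    with a fraction `reduction` of the volume filtered out before ingestion.
    Assumes cost is directly proportional to volume."""
    return annual_cost * (1 + growth_rate) ** years * (1 - reduction)


for year in range(4):
    unfiltered = projected_cost(ANNUAL_COST_USD, GROWTH_RATE, year)
    filtered = projected_cost(ANNUAL_COST_USD, GROWTH_RATE, year, reduction=0.5)
    print(f"year {year}: unfiltered ${unfiltered:,.0f}, filtered ${filtered:,.0f}")
```

Under these assumptions the filtered cost is always half the unfiltered one, but the absolute gap between the two widens every year as volume compounds.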

What’s Really at Stake?

Every security leader knows the math: reduce log ingestion to cut costs and reduce alert fatigue. But what if the log you filter out holds the clue to your next breach?

For most teams, reducing log ingestion feels like a gamble with high stakes because they lack clear insights into the quality of their data. What looks irrelevant today could be the breadcrumb that helps uncover a zero-day exploit or an advanced persistent threat (APT) tomorrow. To stay ahead, teams must constantly evaluate and align their log sources with the latest threat intelligence and Indicators of Compromise (IOCs). It’s complex. It’s time-consuming. Dashboards without actionable context provide little value.

"Security teams don’t need more dashboards. They need answers. They need insights."

— Mihir Nair, Head of Architecture & Innovation at DataBahn

These answers and insights come from advanced technologies like AI.

Intercept The Next Threat With AI-Powered Log Prioritization

According to IBM’s cost of a data breach report, organizations using AI reported significantly shorter breach lifecycles, averaging only 214 days.

AI changes how Microsoft Sentinel handles data. It analyzes incoming logs and picks out the relevant ones. It filters out redundant or low-value logs.

Unlike traditional static rules, AI within Sentinel learns your environment’s normal behavior, detects anomalies, and correlates events across integrated data sources like Azure, AWS, firewalls, and custom applications. This helps Sentinel find threats hidden in huge data streams. It cuts down the noise that overwhelms security teams. AI also adds context to important logs. This helps prioritize alerts based on true risk.

In short, alert fatigue drops. Ingestion costs go down. Detection and response speed up.
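The idea of learning "normal" behavior and flagging deviations can be illustrated with a very small statistical baseline. This is a deliberately simple z-score check, not the model Sentinel or DataBahn actually uses; the function name, the threshold of 3 standard deviations, and the sample counts are assumptions for illustration.

```python
import statistics


def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it lies more than `threshold` standard deviations
    from the mean of `history` (e.g. hourly failed-login counts)."""
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return current != mean
    return abs(current - mean) / stdev > threshold


# Hypothetical hourly failed-login counts for one account.
baseline = [10, 12, 11, 9, 10, 11, 10, 12]
print(is_anomalous(baseline, 11))  # a typical hour
print(is_anomalous(baseline, 40))  # a sudden spike
```

Production systems typically go further, for example by accounting for time-of-day patterns and correlating several signals, but the principle is the same: score each event against what is usual for that environment instead of against a fixed static rule.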

Why Traditional Log Management Hampers Sentinel Performance

The conventional approach to log management struggles to scale with modern security demands as it relies on static rules and manual tuning. When unfiltered data floods Sentinel, analysts find themselves filtering out noise and managing massive volumes of logs rather than focusing on high-priority threats. Diverse log formats from different sources further complicate correlation, creating fragmented security narratives instead of cohesive threat intelligence.

Without an intelligent filtering mechanism, security teams become overwhelmed as false positives and alert fatigue increase significantly, obscuring genuine threats. This directly impacts MTTR (Mean Time to Respond), leaving security teams constantly reacting to alerts rather than proactively hunting threats.

The key to overcoming these challenges lies in effectively optimizing how data is ingested, processed, and prioritized before it ever reaches Sentinel. This is precisely where DataBahn’s AI-powered data pipeline management platform excels, delivering seamless data collection, intelligent data transformation, and log prioritization to ensure Sentinel receives only the most relevant and actionable security insights.

AI-driven Smart Log Prioritization is the Solution

Reducing Data Volume and Alert Fatigue by 50% while Optimizing Costs

By implementing intelligent log prioritization, security teams achieve what previously seemed impossible: better security visibility with less data. DataBahn's precision filtering ensures only high-quality, security-relevant data reaches Sentinel, reducing overall volume by up to 50% without creating visibility gaps. This targeted approach immediately benefits security teams by significantly reducing alert fatigue and false positives: alert volume drops by 37%, and analysts can focus on genuine threats rather than endless triage.

The results extend beyond operational efficiency to significant cost savings. With built-in transformation rules, intelligent routing, and dynamic lookups, organizations can implement this solution without complex engineering efforts or security architecture overhauls. A UK-based enterprise consolidated multiple SIEMs into Sentinel using DataBahn’s intelligent log prioritization, cutting annual ingestion costs by $230,000. The solution ensured Sentinel received only security-relevant data, drastically reducing irrelevant noise and enabling analysts to swiftly identify genuine threats, significantly improving response efficiency.

Future-Proofing Your Security Operations

As threat actors deploy increasingly sophisticated techniques and data volumes continue growing at 28% year-over-year, the gap between traditional log management and security needs will only widen. Organizations implementing AI-powered log prioritization gain immediate operational benefits while building adaptive defenses for tomorrow's challenges.

This advanced technology by DataBahn creates a positive feedback loop: as analysts interact with prioritized alerts, the system continuously refines its understanding of what constitutes a genuine security signal in your specific environment. This transforms security operations from reactive alert processing to proactive threat hunting, enabling your team to focus on strategic security initiatives rather than data management.

Conclusion

The question isn't whether your organization can afford this technology. It's whether you can afford to continue without it as data volumes keep expanding. With DataBahn’s intelligent log filtering, organizations reduce alert fatigue and get more out of Microsoft Sentinel by focusing on high-priority threats while minimizing unnecessary noise. After all, in modern security operations, it’s not about having more data; it's about having the right data.

Watch this webinar featuring Davide Nigro, Co-Founder of DOTDNA, as he shares how they leveraged DataBahn to significantly reduce data overload, optimizing Sentinel performance and cost for one of their UK-based clients.
