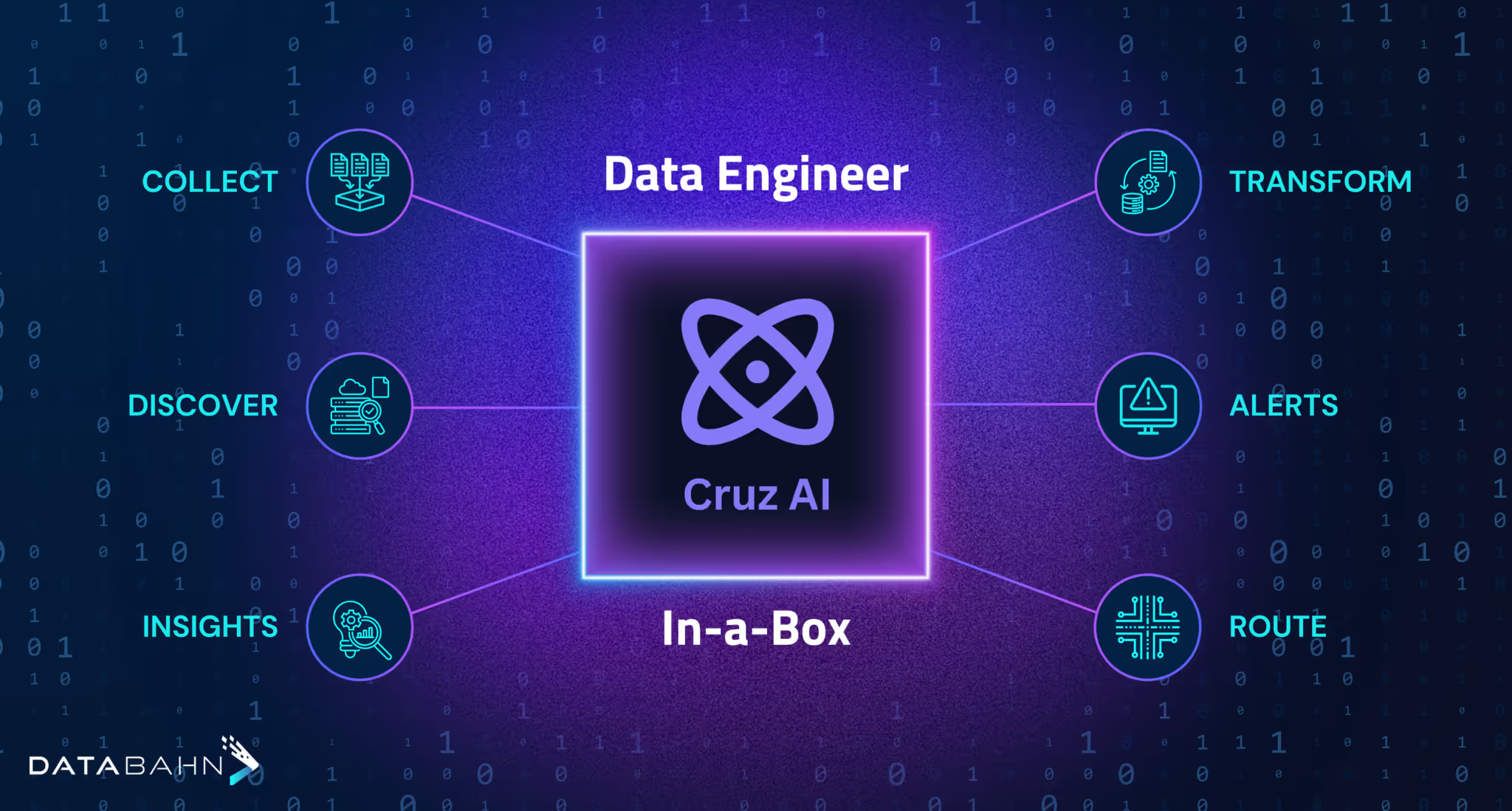

Introducing Cruz: An AI Data Engineer In-a-Box

Why we built it and what it does

Artificial Intelligence is often perceived as a panacea for modern business challenges, with its potential to unlock greater efficiency, enhance decision-making, and optimize resource allocation. However, today’s commercially available AI solutions are reactive: they assist, enhance analysis, and bolster detection, but don’t act on their own. With the explosion of data from cloud applications, IoT devices, and distributed systems, data teams are burdened with manual monitoring, complex security controls, and fragmented systems that demand constant oversight. What they really need is not just an AI copilot but a complementary data engineer that takes over the exhausting work and frees them up for more strategic data and security work.

That’s where we saw an opportunity. The question that inspired us: How do we transform the way organizations approach data management? The answer led us to Cruz—not just another AI tool, but an autonomous AI data engineer that monitors, detects, adapts, and actively resolves issues with minimal human intervention.

Why We Built Cruz

Organizations face unprecedented challenges in managing vast amounts of data across multiple systems. From integration headaches to security threats, data engineers and security teams are under immense pressure to keep pace with evolving data risks. These challenges extend beyond mere volume; they affect the effectiveness, security, and real-time insight generation of the entire data operation.

- Integration Complexity

Data ecosystems are expanding, encompassing diverse tools and platforms—from SIEMs to cloud infrastructure, data lakes, and observability tools. The challenge lies in integrating these disparate systems to achieve unified visibility without compromising security or efficiency. Data teams often spend days or even weeks developing custom connections, which then require continuous monitoring and maintenance.

- Disparate Data Formats

Data is generated in varied formats—from logs and alerts to metrics and performance data—making it difficult to maintain quality and extract actionable insights. Compounding this challenge, these formats are not static; schema drifts and unexpected variations further complicate data normalization.

- The Cost of Scaling and Storage

With data growing exponentially, organizations struggle with storage, retrieval, and analysis costs. Storing massive amounts of data inflates SIEM and cloud storage costs, while manually filtering out low-value data without losing essential information is nearly impossible. The challenge isn’t just about storage; it’s about efficiently managing data volume while preserving what matters.

- Delayed and Inconsistent Insights

Even after data is properly integrated and parsed, extracting meaningful insights is another challenge. Overwhelming volumes of alerts and events make it difficult for data teams to manually query and review dashboards. This overload delays insights, increasing the risk of missing real-time opportunities and security threats.

These challenges demand excessive manual effort—updating normalization, writing rules, querying data, monitoring, and threat hunting—leaving little time for innovation. While traditional AI tools improve efficiency by automating basic tasks or detecting predefined anomalies, they lack the ability to act, adapt, and prioritize autonomously.

What if AI could do more than assist? What if it could autonomously orchestrate data pipelines, proactively neutralize threats, intelligently parse data, and continuously optimize costs? This vision drove us to build Cruz to be an AI system that is context-aware, adaptive, and capable of autonomous decision-making in real time.

Cruz as Agentic AI: Informed, Perceptive, Proactive

Traditional data management solutions are struggling to keep up with the complexities of modern enterprises. We needed a transformative approach—one that led us to agentic AI. Agentic AI represents the next evolution in artificial intelligence, blending sophisticated reasoning with iterative planning to autonomously solve complex, multi-step problems. Cruz embodies this evolution through three core capabilities: being informed, perceptive, and proactive.

Informed Decision-Making

Cruz leverages Retrieval-Augmented Generation (RAG) to understand complex data relationships and maintain a holistic view of an organization’s data ecosystem. By analyzing historical patterns, real-time signals, and organizational policies, Cruz goes beyond raw data analysis to make intelligent, autonomous decisions that improve efficiency.

Perceptive Analysis

Cruz’s perceptive intelligence extends beyond basic pattern detection. It recognizes hidden correlations across diverse data sources, differentiates between routine fluctuations and critical anomalies, and dynamically adjusts its responses based on situational context. This deep awareness ensures smarter, more precise decisions without requiring constant human intervention.

Proactive Intelligence

Rather than waiting for issues to emerge, Cruz actively monitors data environments, anticipating potential challenges before they impact operations. It identifies optimization opportunities, detects anomalies, and initiates corrective actions autonomously while continuously evolving to deliver smarter and more effective data management over time.

Redefining Data Management with Autonomous Intelligence

Modern data environments are complex and constantly evolving, requiring more than just automation. Cruz’s agentic capabilities redefine how organizations manage data by autonomously handling tasks that traditionally consume significant engineering time. For example, when schema drift occurs, traditional tools may only alert administrators, but Cruz autonomously analyzes the data pattern, identifies inconsistencies, and updates normalization in real time.
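To make the schema drift example concrete, here is a minimal Python sketch of what detecting drift can look like. It is purely illustrative and is not Cruz's implementation: the function names (`infer_schema`, `detect_drift`) and the sample log fields are assumptions invented for this example, and the approach shown (comparing field names and value types against a baseline) is only one simple way to spot drift.

```python
from typing import Any


def infer_schema(record: dict[str, Any]) -> dict[str, str]:
    """Map each field name to the name of its Python type."""
    return {key: type(value).__name__ for key, value in record.items()}


def detect_drift(expected: dict[str, str], record: dict[str, Any]) -> dict[str, dict]:
    """Compare a record against a baseline schema (hypothetical helper).

    Returns the fields that were added, removed, or changed type.
    """
    observed = infer_schema(record)
    shared = expected.keys() & observed.keys()
    return {
        "added": {k: observed[k] for k in observed.keys() - expected.keys()},
        "removed": {k: expected[k] for k in expected.keys() - observed.keys()},
        "retyped": {
            k: (expected[k], observed[k]) for k in shared if expected[k] != observed[k]
        },
    }


# Baseline learned from an earlier, well-formed event (sample data).
baseline = infer_schema({"ts": "2024-01-01T00:00:00Z", "src_ip": "10.0.0.1", "bytes": 512})

# A later event where a field was renamed and a number arrives as a string.
drift = detect_drift(
    baseline,
    {"ts": "2024-01-01T00:00:05Z", "source_ip": "10.0.0.2", "bytes": "512"},
)
print(drift)
```

A tool that only alerts would stop at reporting `drift`. An autonomous system would go further and use a result like this to propose or apply an updated normalization rule, for instance mapping `source_ip` back to `src_ip` and casting `bytes` to an integer.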

Unlike traditional tools that rely on static monitoring, Cruz actively scans your data ecosystem, identifying threats and optimization opportunities before they escalate. Whether it's streamlining data flows, transforming data, or reducing data volume, Cruz executes these tasks autonomously while ensuring data integrity.

Cruz's Core Capabilities

- Plug and Play Integration: Cruz automatically discovers data sources across cloud and on-prem environments, providing a comprehensive data overview. With a single click, Cruz streamlines what would typically be hours of manual setup into a fast, effortless process, ensuring quick and seamless integration with your existing infrastructure.

- Automated Parsing: Where traditional tools stop at flagging issues, Cruz takes the next step. It proactively parses, normalizes, and resolves inconsistencies in real time. It autonomously updates schemas, masks sensitive data, and refines structures—eliminating days of manual engineering effort.

- Real-time AI-driven Insights: Cruz leverages advanced AI capabilities to provide insights that go far beyond human-scale analysis. By continuously monitoring data patterns, it provides real-time insights into performance, emerging trends, volume reduction opportunities, and data quality enhancements, enabling better decision-making and faster data optimization.

- Intelligent Volume Reduction: Cruz actively monitors data environments to identify opportunities for volume reduction by analyzing patterns and creating rules to filter out irrelevant data. For example, it identifies irrelevant fields in logs sent to SIEM systems, eliminating data that doesn't contribute to security insights. Additionally, it filters out duplicate or redundant data, minimizing storage and observability costs while maintaining data accuracy and integrity.

- Automating Analytics: Cruz operates 24/7, continuously monitoring and analyzing data streams in real time to ensure no insights are missed. With deep contextual understanding, it detects patterns, anticipates potential threats, and uncovers optimization opportunities. By automating these processes, Cruz saves engineering hours, minimizes human error, and ensures data remains protected, enriched, and readily available for actionable insights.
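The volume reduction capability described above (dropping fields that add no security value, then filtering duplicates) can be sketched in a few lines of Python. This is an illustrative sketch only, not how Cruz works internally: `reduce_volume`, the `keep_fields` allow-list, and the sample events are all hypothetical, and a real system would derive the rules from observed patterns rather than a hand-written set.

```python
import hashlib
import json
from typing import Any, Iterable, Iterator


def reduce_volume(
    events: Iterable[dict[str, Any]], keep_fields: set[str]
) -> Iterator[dict[str, Any]]:
    """Drop fields outside the allow-list, then skip exact duplicates.

    Hypothetical helper: duplicates are detected by hashing the trimmed
    event, so two events that differ only in dropped fields count as one.
    """
    seen: set[str] = set()
    for event in events:
        trimmed = {k: v for k, v in event.items() if k in keep_fields}
        # sort_keys makes the hash independent of field order.
        payload = json.dumps(trimmed, sort_keys=True, default=str)
        digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        yield trimmed


# Sample events: the first two differ only in a field we do not keep.
events = [
    {"ts": "2024-01-01T00:00:00Z", "user": "alice", "action": "login", "debug_trace": "a1"},
    {"ts": "2024-01-01T00:00:00Z", "user": "alice", "action": "login", "debug_trace": "b2"},
    {"ts": "2024-01-01T00:00:07Z", "user": "bob", "action": "logout", "debug_trace": "c3"},
]

reduced = list(reduce_volume(events, keep_fields={"ts", "user", "action"}))
print(len(events), "->", len(reduced))
```

Note that the `seen` set grows with the number of distinct events, so a long-running pipeline would need a bounded window or an expiring cache instead. Deduplicating after trimming is also a deliberate choice here: it removes more data, at the cost of treating events as identical when they differ only in discarded fields.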

Conclusion

Cruz is more than an AI tool—it’s an AI Data Engineer that evolves with your data ecosystem, continuously learning and adapting to keep your organization ahead of data challenges. By automating complex tasks, resolving issues, and optimizing operations, Cruz frees data teams from the burden of constant monitoring and manual intervention. Instead of reacting to problems, organizations can focus on strategy, innovation, and scaling their data capabilities.

In an era where data complexity is growing, businesses need more than automation—they need an intelligent, autonomous system that optimizes, protects, and enhances their data. Cruz delivers just that, transforming how companies interact with their data and ensuring they stay competitive in an increasingly data-driven world.

With Cruz, data isn’t just managed—it’s continuously improved.

Ready to transform your data ecosystem with Cruz? Learn more about Cruz here.
