What on earth is a security data fabric, and why do we suddenly need one?

Every time I am at a security conference, a new buzzword is all over most vendors’ signage. One year it was UEBA (User and Entity Behavior Analytics), next it was EDR (Endpoint Detection and Response), then XDR (Extended Detection and Response), and then ASM (Attack Surface Management). Some of these are truly new and valuable capabilities; others are a rebranding of an existing capability. Some vendors genuinely have something to do with the new capability (i.e., the buzzword), and some are just hoping to ride the wave of hype. This year, we will probably hear a lot about GenAI and cybersecurity, and about the security data fabric. Let me tackle the latter in this article, with another article to follow soon on GenAI and cybersecurity.

Problem Statement:

Many organizations are dealing with an explosion of security logs directed to the SIEM and other security monitoring systems: terabytes of data every day!

- How can you better manage the growing cost of security log data collection?

- Do you know if all of this data clogging your SIEM storage has high security value?

- Are you collecting the most relevant security data?

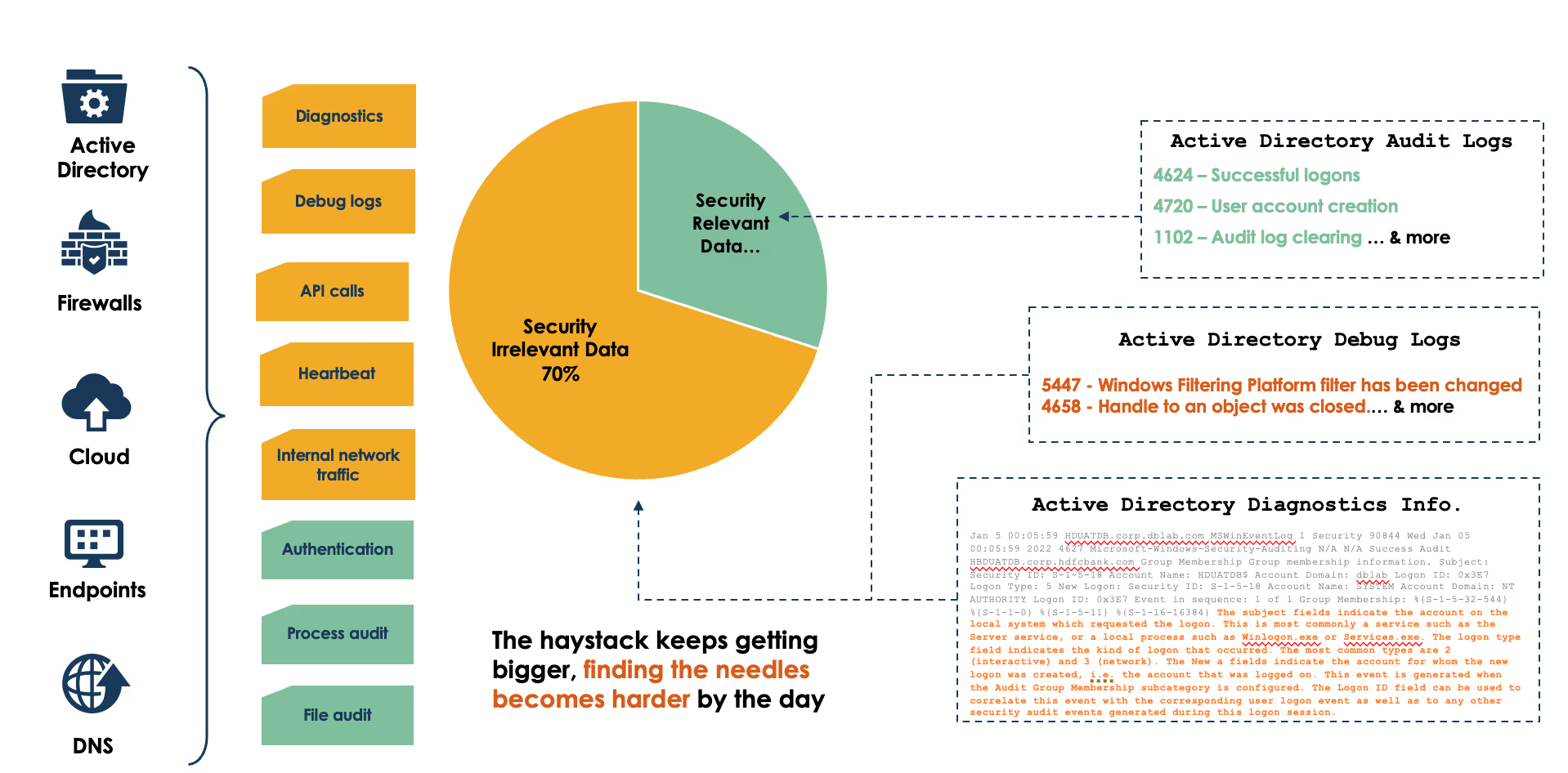

To illustrate, here is an example of windows security events and a view on what elements have high security value compared to the total volume typically collected:

- Do you have genuine visibility into potential security log data duplication and underlying inconsistencies? Is your system able to identify missing security logs and security log schema drift fast enough for your SOC to avoid missing something relevant?

- As SIEM and security analytics capabilities evolve, how do you best decouple security log integration from the SIEM and other threat detection platforms, to allow not only easier migration to the latest technology but also cost-effective and seamless access to this security data for threat hunting and other user groups?

- Major Next-Gen SIEMs operate on a consumption-based model that expects end users to break down queries by data source and/or a narrowed time range, which increases the total number of queries executed and increases your cost significantly!

As security practitioners, we either accepted these issues as the cost of running our SOC, handled some of them manually, or hoped that the cloud and/or SIEM vendors would one day have a better approach to dealing with them, to no avail. This is why you need a security data fabric.

What is a Security Data Fabric (SDF)?

A data fabric is a solution that connects, integrates, and governs data across different systems and applications. It uses artificial intelligence and metadata automation to create flexible and reusable data pipelines and services. For clarity, a data fabric is simply a set of capabilities that gives you a lot more control over your data end to end, covering how it is ingested and where it is forwarded and stored, in service of your business goals, rather than just collecting and hoarding a heap of data in an expensive data lake and hoping that one day some use will come of it. The security data fabric is meant to tightly couple these principles with deep security expertise and the use of artificial intelligence, so that you can master your security data, optimize your security monitoring investments, and enable enhanced threat detection.

The key outcome of a security data fabric is to allow security teams to focus on their core function (i.e., threat detection) instead of spending countless hours tinkering with data engineering tasks, which means automation, seamless integration, and minimal overhead on ongoing operations.

Components of a Security Data Fabric (SDF):

Smart Collection:

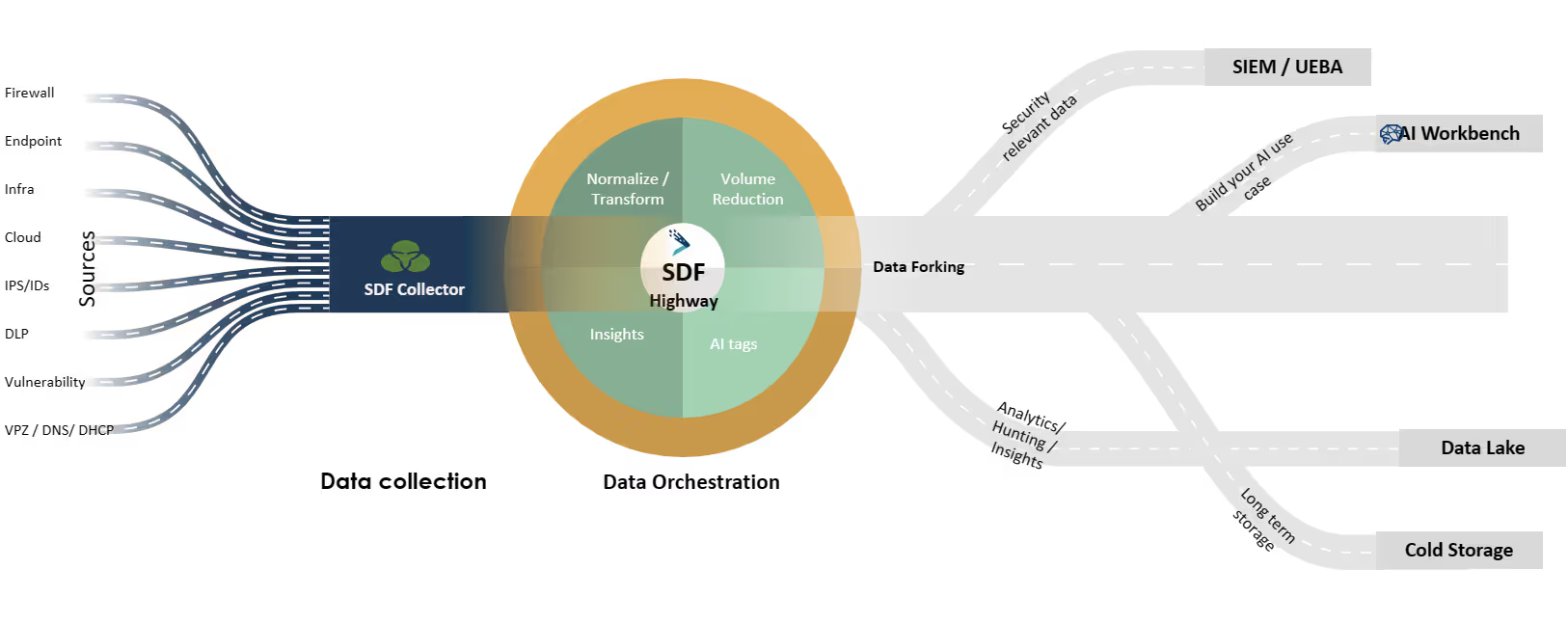

This is meant to decouple the collection of security log data from the SIEM/UEBA vendor you are using. It lets you send the relevant security data to the SIEM/UEBA, send a copy to a security data lake to create additional AI-enabled threat detection use cases (i.e., an AI workbench) or to perform threat hunting, and send compliance-related logs to cold storage.
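As a rough illustration of this decoupling, the sketch below decides where each event should go before anything reaches the SIEM. The destination names, the event fields (`category`, `compliance`), and the set of high-value categories are hypothetical placeholders chosen for the example, not any product's schema. It also assumes that a full copy of every event goes to the data lake.

```python
from collections import defaultdict

# Hypothetical categories assumed to carry threat detection value.
HIGH_VALUE_CATEGORIES = {"authentication", "process_creation", "privilege_change"}


def route(event: dict) -> list[str]:
    """Return the destinations an event should be forwarded to."""
    # Assumption: the data lake keeps a full copy for hunting and AI use cases.
    destinations = ["data_lake"]
    if event.get("category") in HIGH_VALUE_CATEGORIES:
        destinations.append("siem")
    if event.get("compliance", False):
        destinations.append("cold_storage")
    return destinations


def fan_out(events: list[dict]) -> dict[str, list[dict]]:
    """Group events by destination so each sink receives only what it needs."""
    sinks = defaultdict(list)
    for event in events:
        for destination in route(event):
            sinks[destination].append(event)
    return dict(sinks)
```

In a real deployment the routing rules would come from configuration rather than constants in code, so that a SIEM can be swapped without touching collection.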

Why important?

- Minimize vendor lock-in and allow your system to leverage this data in various environments and formats, without needing to pay multiple times to use your own security data outside of the SIEM - particularly for requirements such as threat hunting and the creation of advanced threat-detection use cases using AI.

- Eliminate the data loss that occurs with traditional SIEM log forwarders and syslog relay servers.

- Eliminate custom code/scripts for data collection.

- Reduce data transfer between cloud environments, especially in a hybrid cloud environment.

Security Data Orchestration:

This is where the security expertise in the security data fabric becomes VERY important. The security data orchestration includes the following elements:

- Normalize, Parse, and Transform: Apply AI and security expertise for seamless normalization, parsing, and transformation of security data into the format you need (such as OCSF, CEF, or CIM) for ingestion into your SIEM/UEBA tool, a security data lake, or other data storage solutions.

- Data Forking: Again, applying AI and security expertise to identify which security logs have the right fields and attributes that have threat detection value and should be sent to the SIEM, and which other logs should be sent straight to cold storage for compliance purposes, as an example.

- Data Lineage and Data Observability: These are well-established capabilities in data management tools. We are applying them here to security data, so we no longer need to wonder whether a threat detection rule is not firing because the log source is dead/MIA or because there are no hits. Existing collectors do not always give you visibility into individual log sources (at the level of the individual device and log attribute/telemetry). This capability solves that challenge.

- Data Quality: Ability to monitor and alert on schema drift and to track the consistency, completeness, reliability, and relevance of the security data collected, stored, and used.

- Data Enrichment: This is where you start getting exciting value. The security data fabric uses its visibility into all your security data to provide insights using advanced AI, such as:

  - Correlation with threat intel showing new CVEs or IoCs impacting your assets, how they map to the MITRE ATT&CK kill chain, and a historical view of the potential presence of these indicators in your environment.

  - Recommendations on new threat detection use cases to apply based on your threat profile.
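To make the observability and data quality items above more concrete, here is a minimal sketch of two checks: flagging log sources that have gone silent, and comparing an incoming event against an expected schema. The function names, field names, and thresholds are assumptions made for illustration. A real fabric would track this per device and per attribute, and at far larger scale.

```python
from datetime import datetime, timedelta


def silent_sources(last_seen: dict[str, datetime], now: datetime,
                   max_silence: timedelta) -> list[str]:
    """Return log sources that have not sent an event within max_silence."""
    return sorted(src for src, ts in last_seen.items() if now - ts > max_silence)


def schema_drift(expected_fields: set[str], event: dict) -> dict[str, set[str]]:
    """Compare an event's field names with the expected schema."""
    actual = set(event)
    return {
        "missing": expected_fields - actual,      # fields the rules rely on
        "unexpected": actual - expected_fields,   # new or renamed fields
    }
```

With these two checks, a rule that is not firing can be traced to one of three causes: the source stopped sending, a field was renamed upstream (for example `src_ip` becoming `source_ip`), or there were genuinely no hits.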

Why important?

- Automation: At face value, existing tools promise some of these capabilities, but they usually need a massive amount of manual effort and deep security expertise to implement. A security data fabric automates this work, which allows the SOC team to focus on their core function (i.e., threat detection) instead of spending countless hours tinkering with data engineering tasks.

- Volume Reduction: This is the most obvious value of using a security data fabric. You can reduce the data volume being sent to your SIEM by 30-50% by using a security-intelligent data fabric, as it will forward only data that has security value to your SIEM and send the rest to cheaper data storage. Yes, you read this correctly, 30-50% volume reduction! Imagine the cost savings and how much new useful security data you can start sending to your SIEM for enhanced threat detection.

- Enhanced Threat Detection: An SDF enables the threat-hunting team to run queries more effectively and cheaply by giving them access to a separate data lake. You get full control of your security data and ongoing enrichment that improves your threat detection capabilities. Isn’t this what a security solution is about at the end of the day?
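To put the 30-50% volume reduction in concrete terms, the short calculation below shows what remains to be ingested by the SIEM. The 1,000 GB/day starting volume is a made-up input for illustration only, not a benchmark or a measured figure.

```python
def siem_volume_after_reduction(daily_gb: float, reduction: float) -> float:
    """Daily volume still sent to the SIEM after a fractional reduction (0 to 1)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return daily_gb * (1.0 - reduction)


# Illustrative input only: 1,000 GB/day is a hypothetical starting volume.
for reduction in (0.30, 0.50):
    remaining = siem_volume_after_reduction(1000.0, reduction)
    print(f"{reduction:.0%} reduction -> {remaining:.0f} GB/day to the SIEM")
```

Under that assumption, 700 to 500 GB/day would still reach the SIEM, with the remainder going to cheaper storage.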
