The SIEM Cost Spiral Security Leaders Face

Imagine if your email provider charged you for every message sent and received, even the junk, the duplicates, and the endless promotions. That’s effectively how SIEM billing works today. Every log ingested and stored is billed at premium rates, even though only a fraction is truly security relevant. For enterprises, initial license fees might seem manageable, or even good value, but that's before rising data volumes push them into license overages, inflicting punishing costs and budget overruns on already strained SOCs.

For enterprises that ingest their entire log volume, SIEM costs can exceed a million dollars annually, and analysts spend nearly 30% of their time chasing low-value alerts generated by that growing volume. Some SOCs contain costs by switching off noisy sources such as firewalls or EDRs/XDRs, but this leaves them vulnerable.

The tension is simple: you cannot stop collecting telemetry without creating blind spots, and you cannot keep paying for every byte without draining the security budget.

Our team, with decades of cybersecurity experience, has seen that pre-ingestion processing and tiering of data can significantly reduce volumes and save costs, while maintaining and even improving SOC security posture.

Key Drivers Behind Rising SIEM Costs

SIEM platforms have become indispensable, but their pricing and operating models haven’t kept pace with today’s data realities. Several forces combine to push costs higher year after year:

1. Exploding telemetry growth

Cloud adoption, SaaS proliferation, and IoT/endpoint sprawl have multiplied the volume of security data. Yesterday’s manageable gigabytes quickly become today’s terabytes.

2. Retention requirements

Regulations and internal policies force enterprises to keep logs for months or even years. Audit teams often require this data to stay in hot tiers, keeping storage costs high. Retrieval from archives adds another layer of expense.

3. Ingestion-based pricing

SIEM costs are still based on how much data you ingest and store. As log sources multiply across cloud, SaaS, IoT, and endpoints, every new gigabyte directly inflates the bill.
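To make the arithmetic concrete, here is a minimal back-of-envelope sketch of volume-based pricing. The flat per-gigabyte rate and the daily volumes are hypothetical placeholders chosen for illustration, not any vendor's actual pricing, and `annual_ingest_cost` is an invented helper name.

```python
def annual_ingest_cost(gb_per_day: float, price_per_gb: float, days: int = 365) -> float:
    """Annual cost under a purely volume-based model (hypothetical flat rate)."""
    return gb_per_day * price_per_gb * days


# Illustrative numbers only: 500 GB/day today, 650 GB/day after new sources are onboarded.
baseline = annual_ingest_cost(gb_per_day=500, price_per_gb=2.0)
after_growth = annual_ingest_cost(gb_per_day=650, price_per_gb=2.0)

print(baseline)      # 365000.0
print(after_growth)  # 474500.0
```

Under this simple model the bill scales linearly with volume, so every new source shows up directly in the annual total.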

4. Low-value and noisy data

Heartbeats, debug traces, duplicates, and verbose fields consume budget without improving detections. Surveys suggest fewer than 40% of logs provide real investigative value, yet every log ingested is billed.

5. Search and rehydration costs

Investigating historical incidents often requires rehydrating archived data or scanning across large datasets. These searches are compute-intensive and can trigger additional fees, catching teams by surprise.

6. Hidden operational overhead

Beyond licensing, costs show up in infrastructure scaling, cross-cloud data movement, and wasted analyst hours chasing false positives. These indirect expenses compound the financial strain on security programs.

Why Traditional Fixes Fall Short

CISOs struggling to balance their budgets know that the SIEM adds the most to the bill, yet they have limited options to control it. They can tune retention policies, archive older data, or apply filters inside the SIEM. Each approach offers some relief, but none addresses the underlying problem.

Retention tuning

Shortening log retention from twelve months to six may lower license costs, but it creates other risks. Audit teams lose historical context, investigations become harder to complete, and compliance exposure grows. The savings often come at the expense of resilience.

Cold storage archiving

Moving logs out of hot tiers does reduce ingestion costs, but the trade-offs are real. When older data is needed for an investigation or audit, retrieval can be slow and often comes with additional compute or egress charges. What looked like savings up front can quickly be offset later.

Routing noisy sources away

Some teams attempt to save money by diverting particularly noisy telemetry, such as firewalls or DNS, away from the SIEM entirely. While this cuts ingestion, it also creates detection gaps. Critical events buried in that telemetry never reach the SOC, weakening security posture and increasing blind spots.

Native SIEM filters

Filtering noisy logs within the SIEM gives the impression of control, but by that stage the cost has already been incurred. Ingest-first, discard-later approaches simply mean paying premium rates for data you never use.

These measures chip away at SIEM costs but don’t solve the core issue: too much low-value, less-relevant data flows into the SIEM in the first place. Without controlling what enters the pipeline, security leaders are forced into trade-offs between cost, compliance, and visibility.

Data Pipeline Tools: The Missing Middle Layer

All of these traditional fixes trade visibility for cost. The more logical solution is to solve for relevance before ingestion: not at the source level, and not with static rules, but dynamically and in real time. That is where a data pipeline tool comes in.

Data pipeline tools sit between log sources and destinations as an intelligent middle layer. Instead of pushing every event straight into the SIEM, data first passes through a pipeline that can filter, shape, enrich, and route it based on its value to detection, compliance, or investigation.

This model changes the economics of security data. High-value events stream into the SIEM where they drive real-time detections. Logs with lower investigative relevance are moved into low-cost storage, still available for audits or forensics. Sensitive records can be masked or enriched at ingestion to reduce compliance exposure and accelerate investigations.
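As one illustration of masking sensitive records in the pipeline, the sketch below replaces selected field values with a truncated hash before the event moves downstream. The field names and the `mask_event` helper are assumptions made for this example; a production pipeline would more likely use a keyed hash or tokenization service.

```python
import hashlib

# Hypothetical field names treated as sensitive in this sketch.
SENSITIVE_FIELDS = {"email", "ssn"}


def mask_event(event: dict) -> dict:
    """Return a copy of the event with sensitive values replaced by a short hash."""
    masked = dict(event)
    for field in SENSITIVE_FIELDS.intersection(event):
        digest = hashlib.sha256(str(event[field]).encode("utf-8")).hexdigest()
        # Keep a stable prefix so the same value can still be correlated across events.
        masked[field] = "sha256:" + digest[:12]
    return masked


print(mask_event({"user": "jdoe", "email": "jdoe@example.com"}))
```

Because the hash is deterministic, analysts can still group events by the masked value without seeing the original.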

In this way, data pipeline tools don’t eliminate data; they ensure each log goes to the right place at the right cost. Security leaders maintain full visibility while avoiding premium SIEM costs for telemetry that adds little detection value.

How Data Pipeline Tools Deliver SIEM Cost Reduction

Data pipeline tools lower SIEM costs and storage bills by aligning cost with value. Instead of paying premium rates to ingest every log, pipelines ensure each event goes to the right place at the right cost. The impact comes from a few key capabilities:

Pre-ingest filtering

Heartbeat messages, duplicate events, and verbose debug logs are removed before ingestion. Cutting noise at the edge reduces volume without losing investigative coverage.
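A minimal sketch of this kind of pre-ingest filter might look like the following. The event-type labels and the `prefilter` helper are hypothetical, and the in-memory `seen` set is a simplification: a real pipeline would bound deduplication to a time window.

```python
import hashlib
import json

# Hypothetical labels for low-value event types.
NOISY_TYPES = {"heartbeat", "debug"}


def prefilter(events):
    """Yield events that are neither noisy nor exact duplicates of an earlier event."""
    seen = set()
    for event in events:
        if event.get("type") in NOISY_TYPES:
            continue
        # Fingerprint the full event so only byte-for-byte duplicates are dropped.
        fingerprint = hashlib.sha256(
            json.dumps(event, sort_keys=True).encode("utf-8")
        ).hexdigest()
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        yield event


sample = [
    {"type": "heartbeat", "host": "fw-1"},
    {"type": "auth_failure", "user": "jdoe"},
    {"type": "auth_failure", "user": "jdoe"},
]
print(list(prefilter(sample)))  # only one auth_failure event remains
```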

Smart routing

High-value logs stream into the SIEM for real-time detection, while less relevant telemetry is archived in low-cost, compliant storage. Everything is retained, but only what matters consumes SIEM resources.
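The routing decision can be sketched as a small function that maps each event to a destination. The event types, the severity threshold, and the destination names below are all assumptions for illustration; in practice the criteria would come from your detection content and compliance requirements.

```python
# Hypothetical set of event types that drive real-time detections.
HIGH_VALUE_TYPES = {"auth_failure", "malware_detected", "privilege_change"}
SEVERITY_THRESHOLD = 7  # hypothetical cut-off on a 0-10 scale


def route(event: dict) -> str:
    """Return "siem" for high-value events and "archive" for everything else."""
    if event.get("type") in HIGH_VALUE_TYPES:
        return "siem"
    if event.get("severity", 0) >= SEVERITY_THRESHOLD:
        return "siem"
    return "archive"


print(route({"type": "malware_detected"}))           # siem
print(route({"type": "dns_query", "severity": 2}))   # archive
```

Nothing is dropped in this sketch: every event gets a destination, which matches the "retain everything, ingest selectively" model described above.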

Enrichment at collection

Logs are enriched with context — such as user, asset, or location — before reaching the SIEM. This reduces downstream processing costs and accelerates investigations, since fewer raw events can still provide more insight.
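As a rough sketch, enrichment at collection can be as simple as a lookup against an asset inventory keyed by source IP. The inventory contents, the field names, and the `enrich` helper are hypothetical stand-ins for whatever CMDB or identity source an organization actually uses.

```python
# Hypothetical in-memory asset inventory; real deployments would query a CMDB or similar.
ASSET_INVENTORY = {
    "10.0.0.5": {"owner": "finance", "criticality": "high"},
}


def enrich(event: dict, inventory: dict = ASSET_INVENTORY) -> dict:
    """Return a copy of the event with asset context attached when the source IP is known."""
    enriched = dict(event)
    asset = inventory.get(event.get("src_ip"))
    if asset is not None:
        enriched["asset_owner"] = asset["owner"]
        enriched["asset_criticality"] = asset["criticality"]
    return enriched


print(enrich({"type": "auth_failure", "src_ip": "10.0.0.5"}))
```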

Normalization and transformation

Standardizing logs into open schemas reduces parsing overhead, avoids vendor lock-in, and simplifies investigations across multiple tools.
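To illustrate the idea, the sketch below renames vendor-specific fields to one shared set of field names. The vendor labels and both field maps are invented for this example, and the target names are not taken from a published schema such as OCSF; a real deployment would map to whichever open schema it has adopted.

```python
# Hypothetical per-vendor field maps onto a shared naming scheme.
FIELD_MAPS = {
    "vendor_a": {"src": "source_ip", "usr": "user", "ts": "timestamp"},
    "vendor_b": {"SourceAddress": "source_ip", "UserName": "user", "EventTime": "timestamp"},
}


def normalize(event: dict, vendor: str) -> dict:
    """Rename known vendor fields to the shared names; pass unknown fields through unchanged."""
    mapping = FIELD_MAPS[vendor]
    return {mapping.get(key, key): value for key, value in event.items()}


a = normalize({"src": "10.0.0.5", "usr": "jdoe"}, "vendor_a")
b = normalize({"SourceAddress": "10.0.0.5", "UserName": "jdoe"}, "vendor_b")
print(a == b)  # True
```

Once both vendors' events share field names, a single detection rule or query can cover both sources.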

Flexible retention

Critical data remains hot and searchable, while long-tail records are moved into cheaper storage tiers. Compliance is maintained without overspending.
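A tiering rule of this kind can be sketched as a function of event age and criticality. The 30-day hot window, the tier names, and the `storage_tier` helper are assumptions for illustration; actual windows depend on your regulatory and investigative requirements.

```python
from datetime import datetime, timedelta, timezone

HOT_WINDOW = timedelta(days=30)  # hypothetical hot-tier window


def storage_tier(event_time: datetime, now: datetime, critical: bool = False) -> str:
    """Keep critical or recent events in the hot tier; move older records to cold storage."""
    if critical or now - event_time <= HOT_WINDOW:
        return "hot"
    return "cold"


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(storage_tier(datetime(2025, 5, 20, tzinfo=timezone.utc), now))  # hot
print(storage_tier(datetime(2025, 1, 1, tzinfo=timezone.utc), now))   # cold
```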

Together, these practices make SIEM cost reduction achievable without sacrificing visibility. Every log is retained, but only the data that truly adds value consumes expensive SIEM resources.

The Business Impact of Modern Data Pipeline Tools

The financial savings from data pipeline tools are immediate, but the strategic impact is more important. Predictable budgets replace unpredictable cost spikes. Security teams regain control over where money is spent, ensuring that value rather than volume drives licensing decisions.

Operations also change. Analysts no longer burn hours triaging low-value alerts or stitching context from raw logs. With cleaner, enriched telemetry, investigations move faster, and teams can focus their energy on meaningful threats instead of noise.

Compliance obligations become easier to meet. Instead of keeping every log in costly hot tiers, organizations retain everything in the right place at the right cost — searchable when required, affordable at scale.

Perhaps most importantly, data pipeline tools create room to maneuver. By decoupling data pipelines from the SIEM itself, enterprises gain the flexibility to change vendors, add destinations, or scale to new environments without starting over. This agility becomes a competitive advantage in a market where security and data platforms evolve rapidly.

In this way, a data pipeline tool is more than a cost-saving measure. It is a foundation for operational resilience and strategic flexibility.

Future-Proofing the SOC with AI-Powered Data Pipeline Tools

Reducing SIEM costs is the immediate outcome of data pipeline tools, but their real value is in preparing security teams for the future. Telemetry will keep expanding, regulations will grow stricter, and AI will become central to detection and response. Without modern pipelines, these pressures only magnify existing challenges.

DataBahn was built with this future in mind. Its components ensure that security data isn’t just cheaper to manage, but structured, contextual, and ready for both human analysts and machine intelligence.

- Smart Edge acts as the collection layer, supporting both agent and agentless methods depending on the environment. This flexibility means enterprises can capture telemetry across cloud, on-prem, and OT systems without the sprawl of multiple collectors.

- Highway processes and routes data in motion, applying enrichment and normalization so downstream systems — SIEMs, data lakes, or storage — receive logs in the right format with the right context.

- Cruz automates data movement and transformation, tagging logs and ensuring they arrive in structured formats. For security teams, this means schema drift is managed seamlessly and AI systems receive consistent inputs without manual intervention.

- Reef, a contextual insight layer, turns telemetry into data that can be queried in natural language or analyzed by AI agents. This accelerates investigations and reduces reliance on dashboards or complex queries.

Together, these capabilities move security operations beyond cost control. They give enterprises the agility to scale, adopt AI, and stay compliant without being locked into a single tool or architecture. In this sense, a data pipeline management tool is not just about cutting SIEM costs; it’s about building an SOC that’s resilient and future-ready.

Cut SIEM Costs, Keep Visibility

For too long, security leaders have faced a frustrating paradox: cut SIEM ingestion to control costs and risk blind spots, or keep everything and pay rising bills to preserve visibility.

Data pipeline tools eliminate that trade-off by moving decisions upstream. You still collect every log, but relevance is decided before ingestion: high-value events flow into the SIEM, the rest land in low-cost, compliant stores. The same normalization and enrichment that lower licensing and storage also produce structured, contextual telemetry that speeds investigations and readies the SOC for AI-driven workflows. The outcome is simple: predictable spend, full visibility, and a pipeline built for what’s next.

The takeaway is clear: SIEM cost reduction and complete visibility are no longer at odds. With a data pipeline management tool, you can achieve both.

Ready to see how? Book a personalized demo with DataBahn and start reducing SIEM and storage costs without compromise.

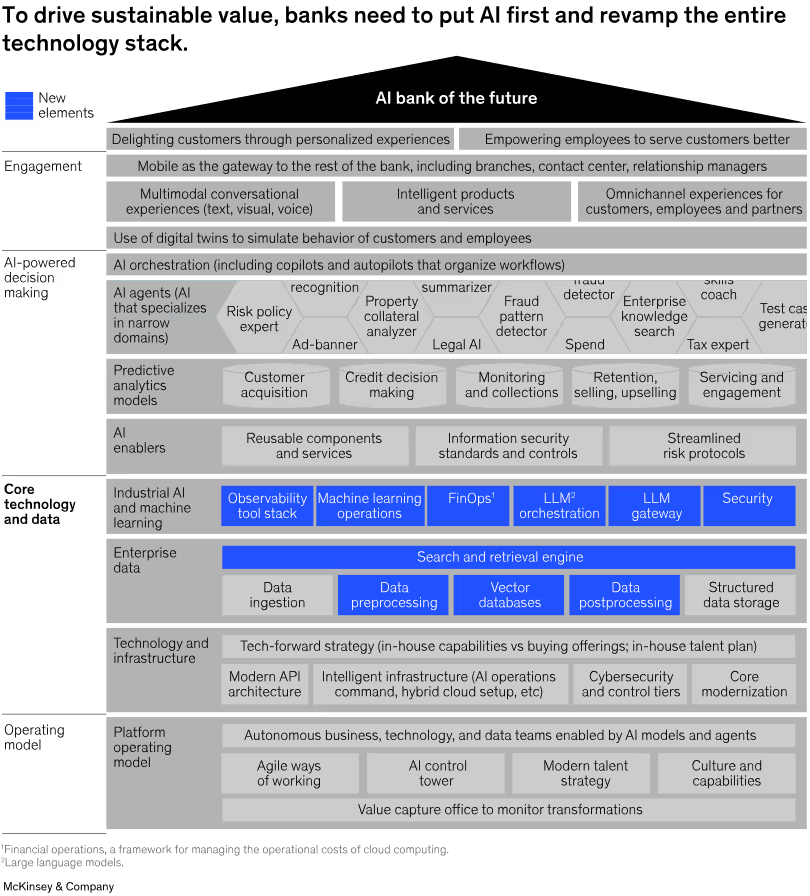

In their article about how banks can extract value from a new generation of AI technology, notable strategy and management consulting firm McKinsey identified AI-enabled data pipelines as an essential part of the ‘Core Technology and Data Layer’. They found this infrastructure to be necessary for AI transformation, as an important intermediary step in the evolution banks and financial institutions will have to make for them to see tangible results from their investments in AI.

The technology stack for the AI-powered banking of the future relies greatly on an increased focus on managing enterprise data better. McKinsey’s Financial Services Practice forecasts that with these tools, banks will have the capacity to harness AI and “… become more intelligent, efficient, and better able to achieve stronger financial performance.”

What McKinsey says

The promise of AI in banking

The authors point to increased adoption of AI across industries and organizations, but note that the depth of adoption remains low and experimental. They lay out their vision of an AI-first bank, which:

- Reimagines the customer experience through personalization and streamlined, frictionless use across devices, for bank-owned platforms and partner ecosystems

- Leverages AI for decision-making, by building the architecture to generate real-time insights and translating them into output which addresses precise customer needs. (They could be talking about Reef)

- Modernizes core technology with automation and streamlined architecture to enable continuous, secure data exchange (and now, Cruz)

They recommend that banks and financial service enterprises set a bold vision for AI-powered transformation, and root the transformation in business value.

AI stack powered by multiagent systems

The true potential of AI will require banks of the future to tread beyond just AI models, the authors claim. With embedding AI into four capability layers as the goal, they identify ‘data and core tech’ as one of those four critical components. They have augmented an earlier AI capability stack, specifically adding data preprocessing, vector databases, and data post-processing to create an ‘enterprise data’ part of the ‘core technology and data layer’. They indicate that this layer would build a data-driven foundation for multiple AI agents to deliver customer engagement and enable AI-powered decision-making across various facets of a bank’s functioning.

Our perspective

Data quality is the single greatest predictor of LLM effectiveness today, and the current generation of AI tools is fundamentally wired to convert large volumes of data into patterns, insights, and intelligence. We believe the true value of enterprise AI lies in depth, where Agentic AI modules can speak and interact with each other while automating repetitive tasks and completing specific and niche workstreams and workflows. This is only possible when the AI modules have access to purposeful, meaningful, and contextual data to rely on.

We are already working with multiple banks and financial services institutions to enable data processing (pre and post), and our Cruz and Reef products are deployed in many financial institutions to become the backbone of their transformation into AI-first organizations.

Are you curious to see how you can come closer to building the data infrastructure of the future? Set up a call with our experts to see what’s possible when data is managed with intelligence.

In September 2022, cybercriminals accessed, encrypted, and stole a substantial amount of data from Suffolk County’s IT systems, which included personally identifiable information (PII) of county residents, employees, and retirees. Although Suffolk County did not pay the ransom demand of $2.5 million, it ultimately spent $25 million to address and remediate the impact of the attack.

Members of the county’s IT team reported receiving hundreds of alerts every day in the weeks leading up to the attack. Several months earlier, frustrated by the excessive number of unnecessary alerts, the team redirected the notifications from their tools to a Slack channel. Although the frequency and severity of the alerts increased leading up to the September breach, the constant stream of alerts wore the small team down, leaving them too exhausted to respond and distinguish false positives from relevant alerts. This situation created an opportunity for malicious actors to successfully circumvent security systems.

The alert fatigue problem

Today, cybersecurity teams are continually bombarded by alerts from security tools throughout the data lifecycle. Firewalls, XDRs/EDRs, and SIEMs are among the common tools that trigger these alerts. In 2020, Forrester reported that SOC teams received 11,000 alerts daily, and 55% of cloud security professionals admitted to missing critical alerts. Organizations cannot afford to ignore a single alert, yet alert fatigue, driven by an overwhelming number of unnecessary alerts, means that up to 30% of security alerts go uninvestigated or are overlooked completely.

While this creates a clear cybersecurity and business continuity problem, it also presents a pressing human issue. Alert fatigue leads to cognitive overload, emotional exhaustion, and disengagement, resulting in stress, mental health concerns, and attrition. More than half of cybersecurity professionals cite their workload as the primary source of stress, two-thirds reported experiencing burnout, and over 60% of cybersecurity professionals surveyed stated it contributed to staff turnover and talent loss.

Alert fatigue poses operational challenges, represents a critical security risk, and truly becomes an adversary of the most vital resource that enterprises rely on for their security — SOC professionals doing their utmost to combat cybercriminals. SOCs are spending so much time and effort triaging alerts and filtering false positives that there’s little room for creative threat hunting.

Data is the problem – and the solution

Alert fatigue is a result, not a root cause. When these security tools were initially developed, cybersecurity teams managed gigabytes of data each month from a limited number of computers on physically connected sites. Today, Security Operations Centers (SOCs) are tasked with handling security data from thousands of sources and devices worldwide, arriving in many different formats. The developers of these devices did not intend to simplify the lives of security teams, and the tools they designed to identify patterns often resemble a fire alarm in a volcano. The more data that is sent as an input to these machines, the more likely they are to malfunction, further exhausting and overwhelming already stretched security teams.

Well-intentioned leaders advocate for improved triaging, the use of automation, refined rules to reduce false-positive rates, and the application of popular technologies like AI and ML. Until we can stop security tools from being overwhelmed by large volumes of unstructured, unrefined, and chaotic data from diverse sources and formats, these fixes will be band-aids on a gaping wound.

The best way to address alert fatigue is to filter data before it is ingested into downstream security tools. Consolidate, correlate, parse, and normalize data before it enters your SIEM or UEBA. If it isn’t necessary for detection, store it in blob storage. If it’s duplicated or irrelevant, discard it. Don’t clutter your SIEM with poor data, so that it doesn’t overwhelm your SOC with alerts no one requested.

How DataBahn helps

At DataBahn, we help enterprises cut through cybersecurity noise with our security data pipeline solution, which works around the clock to:

1. Aggregate and normalize data across tools and environments automatically

2. Apply AI-driven correlation and prioritization

3. Denoise the data going into the SIEM, ensuring more actionable alerts with full context

SOCs using DataBahn aren’t overwhelmed with alerts; they only see what’s relevant, allowing them to respond more quickly and effectively to threats. They are empowered to take a more strategic approach in managing operations, as their time isn’t wasted triaging and filtering out unnecessary alerts.

Organizations looking to safeguard their systems – and protect their SOC members – should shift from raw alert processing to smarter alert management, driven by an intelligent pipeline which combines automation, correlation, and transformation that filters out the noise and combats alert fatigue.

Interested in saving your SOC from alert fatigue? Contact DataBahn.

In the past, we've written about how we solve this problem for Sentinel. You can read more here: AI-powered Sentinel Log Optimization

DataBahn recognized as leading vendor in SACR 2025 Security Data Pipeline Platforms Market Guide

As security operations become more complex and SOCs face increasingly sophisticated threats, the data layer has emerged as the critical foundation. SOC effectiveness now depends on the quality, relevance, and timeliness of data it processes; without a robust data layer, SIEM-based analytics, detection, and response automation crumble under the deluge of irrelevant data and unreliable insights.

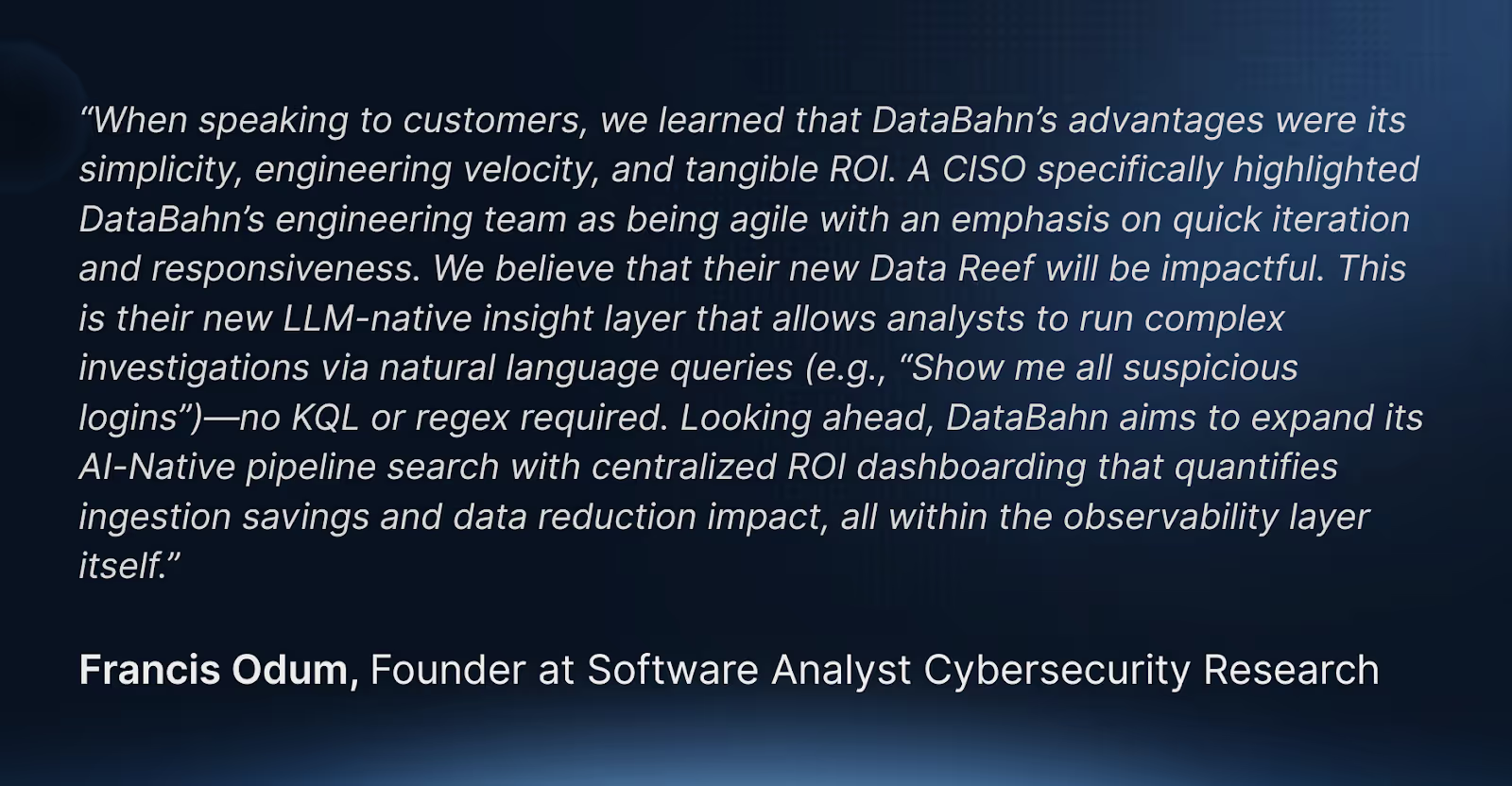

Recognizing the need to engage with current SIEM problems, security leaders are adopting a new breed of security data tools known as Security Data Pipeline Platforms. These platforms sit beneath the SIEM, acting as a control plane for ingesting, enriching, and routing security data in real time. In its 2025 Market Guide, SACR explores this fast-emerging category and names DataBahn among the vendors leading this shift.

Understanding Security Data Pipelines: A New Approach

The SACR report highlights this breaking point: organizations typically collect data from 40+ security tools, generating terabytes daily. This volume overwhelms legacy systems, creating three critical problems:

First, prohibitive costs force painful tradeoffs between security visibility and budget constraints. Second, analytics performance degrades as data volumes increase. Finally, security teams waste precious time managing infrastructure rather than investigating threats.

Security data pipeline platforms resolve these data volume problems partially or fully, with differing outcomes and performance. DataBahn decouples collection from storage and analysis, and automates and simplifies data collection, transformation, and routing. This architecture reduces costs while improving visibility and analytic capabilities, the exact opposite of the traditional SIEM-based approach.

AI-Driven Intelligence: Beyond Basic Automation

The report examines how AI is reshaping security data pipelines. While many vendors claim AI capabilities, few have integrated intelligence throughout the entire data lifecycle.

DataBahn's approach embeds intelligence at every layer of the security data pipeline. Rather than simply automating existing processes, our AI continually optimizes the entire data journey—from collection to transformation to insight generation.

This intelligence layer represents a paradigm shift from reactive to proactive security, moving beyond "what happened?" to answering "what's happening now, and what should I do about it?"

Take threat detection as an example: traditional systems require analysts to create detection rules based on known patterns. DataBahn's AI continually learns from your environment, identifying anomalies and potential threats without predefined rules.

The DataBahn Platform: Engineered for Modern Security Demands

In an era where security data is both abundant and complex, DataBahn's platform stands out by offering intelligent, adaptable solutions that cater to the evolving needs of security teams.

Agentic AI for Security Data Engineering: Our agentic AI, Cruz, automates the heavy lifting across your data pipeline—from building connectors to orchestrating transformations. Its self-healing capabilities detect and resolve pipeline issues in real-time, minimizing downtime and maintaining operational efficiency.

Intelligent Data Routing and Cost Optimization: The platform evaluates telemetry data in real-time, directing only high-value data to cost-intensive destinations like SIEMs or data lakes. This targeted approach reduces storage and processing costs while preserving essential security insights.

Flexible SIEM Integration and Migration: DataBahn's decoupled architecture facilitates seamless integration with various SIEM solutions. This flexibility allows organizations to migrate between SIEM platforms without disrupting existing workflows or compromising data integrity.

Enterprise-Wide Coverage: Security, Observability, and IoT/OT: Beyond security data, DataBahn's platform supports observability, application, and IoT/OT telemetry, providing a unified solution for diverse data sources. With 400+ prebuilt connectors and a modular architecture, it meets the needs of global enterprises managing hybrid, cloud-native, and edge environments.

Next-Generation Security Analytics

One of DataBahn’s standout features highlighted by SACR is our newly launched "insights layer", Reef. Reef transforms how security professionals interact with data through conversational AI. Instead of writing complex queries or building dashboards, analysts simply ask questions in natural language: "Show me failed login attempts for privileged users in the last 24 hours" or "Show me all suspicious logins in the last 7 days."

Critically, Reef decouples insight generation from traditional ingestion models, allowing security analysts to interact directly with their data and gain context-rich insights without cumbersome queries or manual analysis. This significantly reduces mean time to detection (MTTD) and mean time to response (MTTR), allowing teams to prioritize genuine threats quickly.

Moving Forward with Intelligent Security Data Management

DataBahn's inclusion in the SACR 2025 Market Guide affirms our position at the forefront of security data innovation. As threat environments grow more complex, the difference between security success and failure increasingly depends on how effectively organizations manage their data.

We invite you to download the complete SACR 2025 Market Guide to understand how security data pipeline platforms are reshaping the industry landscape. For a personalized discussion about transforming your security operations, schedule a demo with our team.

In today's environment, your security data should work for you, not against you.
